T-DEED: Temporal-Discriminability Enhancer Encoder-Decoder for Precise Event Spotting in Sports Videos

CVsports Workshop at CVPR, 2024

Artur Xarles, Sergio Escalera, Thomas B. Moeslund, and Albert Clapés

University of Barcelona, Computer Vision Center, and Aalborg University

Abstract

In this paper, we introduce T-DEED, a Temporal-Discriminability Enhancer Encoder-Decoder for Precise Event Spotting in sports videos. T-DEED addresses multiple challenges in the task, including the need for discriminability among frame representations, high output temporal resolution to maintain prediction precision, and the necessity to capture information at different temporal scales to handle events with varying dynamics. It tackles these challenges through its specifically designed architecture, featuring an encoder-decoder for leveraging multiple temporal scales and achieving high output temporal resolution, along with temporal modules designed to increase token discriminability. Leveraging these characteristics, T-DEED achieves SOTA performance on the FigureSkating and FineDiving datasets.

Motivation

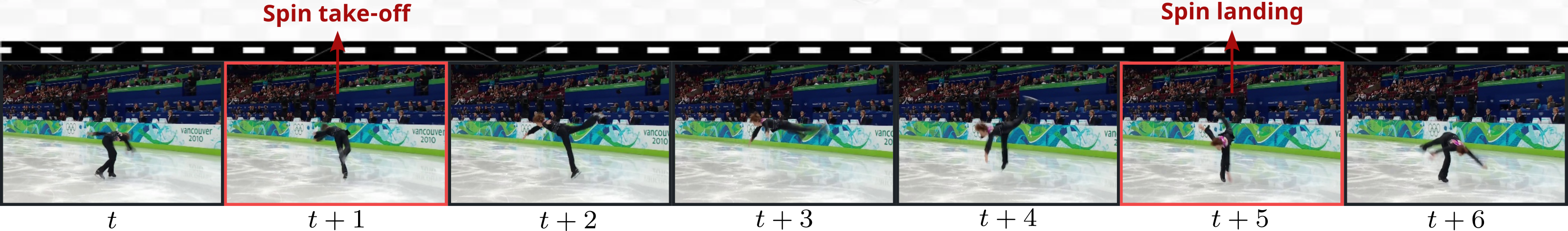

An in-depth examination of the Precise Event Spotting (PES) task in sports videos highlights the challenge of generating accurate temporal predictions. To improve prediction precision, this paper investigates various approaches for modeling temporal information, focusing on creating discriminative frame representations. This discriminability facilitates precise temporal predictions and minimizes confusion between spatially similar concurrent frames. As illustrated in the following figure, the SGP module yields the most discriminable representations among the approaches compared, which supports its effectiveness for PES. Additionally, we explore the importance of employing multiple temporal scales for detecting actions that require different temporal contexts. To combine discriminative representations with multiple temporal scales, T-DEED introduces the SGP-Mixer module, which addresses both aspects of the task.

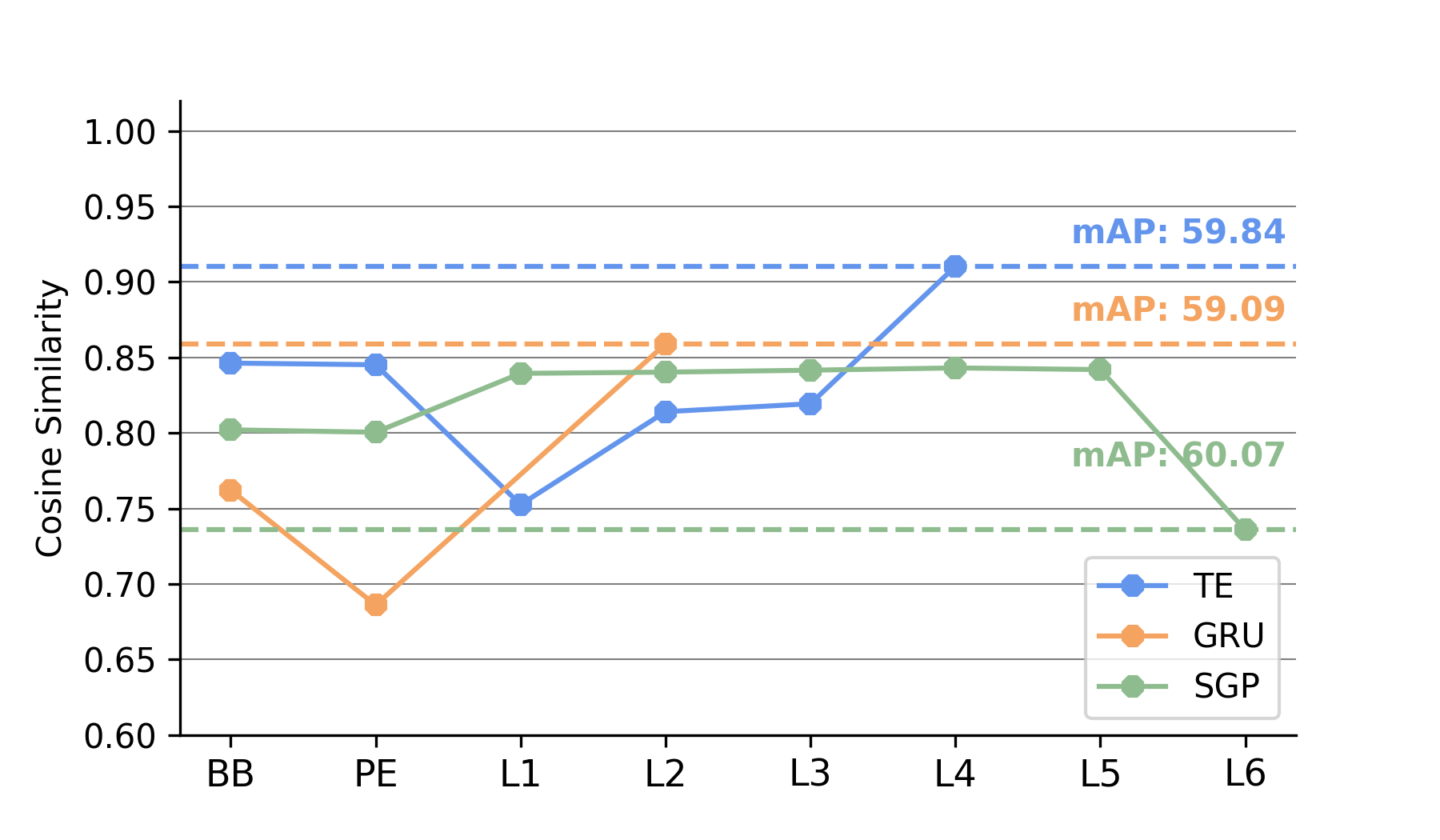
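As a rough illustration of what "discriminability among representations" can mean in practice, the sketch below computes the mean pairwise cosine similarity between the tokens of one sequence; a lower value indicates tokens that are easier to tell apart. The choice of cosine similarity, the function names, and the toy vectors are assumptions made for illustration and are not taken from the paper.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def mean_pairwise_similarity(tokens):
    """Average cosine similarity over all distinct token pairs in a sequence."""
    pairs = [(i, j) for i in range(len(tokens)) for j in range(i + 1, len(tokens))]
    return sum(cosine(tokens[i], tokens[j]) for i, j in pairs) / len(pairs)

# Toy sequences (hypothetical): nearly identical tokens vs. well-separated tokens.
similar = [[1.0, 0.0], [0.99, 0.1], [0.98, 0.2]]
distinct = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

print(mean_pairwise_similarity(similar))   # close to 1: tokens are hard to tell apart
print(mean_pairwise_similarity(distinct))  # much lower: tokens are more discriminable
```

Under this measure, a temporal module that lowers the score for consecutive frames of the same clip would be producing more discriminable tokens.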

Architecture

Our model, Temporal-Discriminability Enhancer Encoder-Decoder (T-DEED), is designed to increase token discriminability for Precise Event Spotting (PES) while leveraging multiple temporal scales. As illustrated in the following figure, T-DEED comprises three main blocks: a feature extractor, a temporally discriminant encoder-decoder, and a prediction head.

We process videos as fixed-length clips, each containing \( L \) densely sampled frames. The feature extractor, composed of a 2D backbone with Gate-Shift-Fuse (GSF) modules, handles the input frames and generates per-frame representations of dimension \( d \), hereafter referred to as tokens. These tokens undergo further refinement within the temporally discriminant encoder-decoder. This module employs SGP layers which, as shown by Shi et al., diminish token similarity, thereby boosting discriminability across tokens of the same sequence.
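To make the clip-based processing concrete, here is a minimal sketch of how a video could be split into fixed-length clips of \( L \) frames. The text only states that clips have a fixed length and are densely sampled; the `stride` parameter, the end-aligned final clip, and the function name `clip_starts` are assumptions introduced for this example.

```python
def clip_starts(num_frames, clip_len, stride):
    """Start indices of fixed-length clips covering a video of num_frames frames."""
    if num_frames <= clip_len:
        # A single clip; a real pipeline would have to pad the missing frames.
        return [0]
    starts = list(range(0, num_frames - clip_len + 1, stride))
    # Add a final clip aligned to the end so the last frames are covered.
    if starts[-1] + clip_len < num_frames:
        starts.append(num_frames - clip_len)
    return starts

# Hypothetical video of 260 frames, clips of L = 100 frames, 50% overlap.
print(clip_starts(260, 100, 50))
```

Each start index `s` corresponds to frames `s` through `s + clip_len - 1`, which the feature extractor would turn into `clip_len` tokens of dimension \( d \).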

The encoder-decoder architecture allows features to be processed across diverse temporal scales, which helps detect events requiring different amounts of temporal context. We also integrate skip connections to preserve the fine-grained information from the initial layers. To effectively merge information coming from different temporal scales in the skip connections, we introduce the SGP-Mixer layer. This layer employs the same principles as the SGP layer to enhance token discriminability while gathering information from temporal windows of varying range.
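The data flow described above can be sketched without any learned layers, which makes the temporal resolutions easier to follow. In the sketch below, max-pooling by a factor of 2, repeat-based upsampling, and an element-wise sum in `merge` are all placeholder assumptions: the actual model applies SGP layers at each scale and uses the SGP-Mixer to combine the skip connection with the upsampled features. The sequence length is assumed to be divisible by `2 ** depth`.

```python
def downsample(tokens):
    """Halve temporal resolution by max-pooling adjacent token pairs."""
    return [[max(a, b) for a, b in zip(tokens[i], tokens[i + 1])]
            for i in range(0, len(tokens) - 1, 2)]

def upsample(tokens):
    """Double temporal resolution by repeating each token."""
    return [list(tok) for tok in tokens for _ in range(2)]

def merge(skip, upsampled):
    """Placeholder merge (element-wise sum) standing in for the SGP-Mixer."""
    return [[a + b for a, b in zip(s, u)] for s, u in zip(skip, upsampled)]

def encoder_decoder(tokens, depth):
    skips = []
    for _ in range(depth):            # encoder: progressively coarser scales
        skips.append(tokens)
        tokens = downsample(tokens)
    for skip in reversed(skips):      # decoder: restore resolution, merge skips
        tokens = merge(skip, upsample(tokens))
    return tokens

# Toy sequence of 8 one-dimensional tokens (hypothetical values).
sequence = [[float(t)] for t in range(8)]
output = encoder_decoder(sequence, depth=2)
print(len(sequence), len(output))  # output keeps the input temporal resolution
```

The point of the sketch is that the decoder returns one token per input frame, so the prediction head can operate at full temporal resolution while intermediate layers see coarser scales.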

Finally, the output tokens are passed to the prediction head, which resembles those commonly used in the Action Spotting (AS) literature. It comprises a classification component that identifies whether an event occurs at the given temporal position or in close proximity (within a radius of \( r_{E} \) frames). Additionally, for positive classifications, a displacement component pinpoints the exact temporal position of the ground-truth event.
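The interplay between the classification and displacement components can be illustrated with a small target-construction sketch. It assumes a single event class, a displacement defined as the signed offset from the current frame to the nearest ground-truth event, and hypothetical function names; the paper's exact target definition and sign convention may differ.

```python
def build_targets(num_frames, event_frames, radius):
    """Per-frame label (1 within `radius` of an event) and offset to that event."""
    labels = [0] * num_frames
    displacements = [0] * num_frames
    for t in range(num_frames):
        nearest = min(event_frames, key=lambda e: abs(e - t), default=None)
        if nearest is not None and abs(nearest - t) <= radius:
            labels[t] = 1
            displacements[t] = nearest - t  # signed offset (assumed convention)
    return labels, displacements

def decode(labels, displacements):
    """Recover event positions by shifting each positive frame by its offset."""
    return sorted({t + d for t, (lab, d) in enumerate(zip(labels, displacements))
                   if lab == 1})

# Hypothetical clip of 10 frames with one event at frame 4 and r_E = 2.
labels, displacements = build_targets(10, [4], 2)
print(labels)
print(displacements)
print(decode(labels, displacements))
```

Frames within the radius are all labeled positive, which eases classification, while the displacement lets every positive frame point back to the same exact event position.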

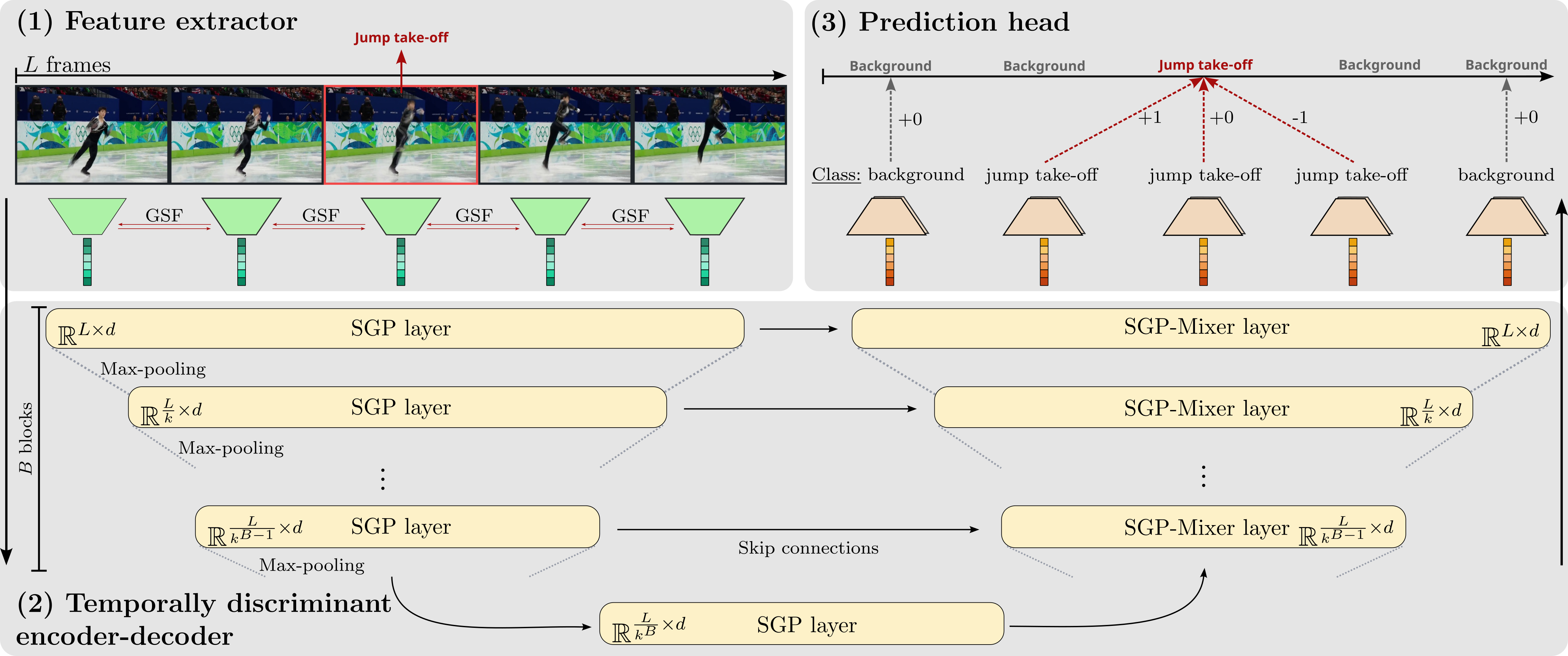

Qualitative results

Here we present qualitative results for the FineDiving dataset.

BibTeX

title={T-DEED: Temporal-Discriminability Enhancer Encoder-Decoder for Precise Event Spotting in Sports Videos},
author={Xarles, Artur and Escalera, Sergio and Moeslund, Thomas B and Clap{\'e}s, Albert},
booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
month={June},
year={2024},
pages={3410-3419}
}