ASTRA: An Action Spotting TRAnsformer for Soccer Videos

ACM Workshop on Multimedia Content Analysis in Sports, 2023

Artur Xarles, Sergio Escalera, Thomas B. Moeslund, and Albert Clapés

University of Barcelona, Computer Vision Center, and Aalborg University

Abstract

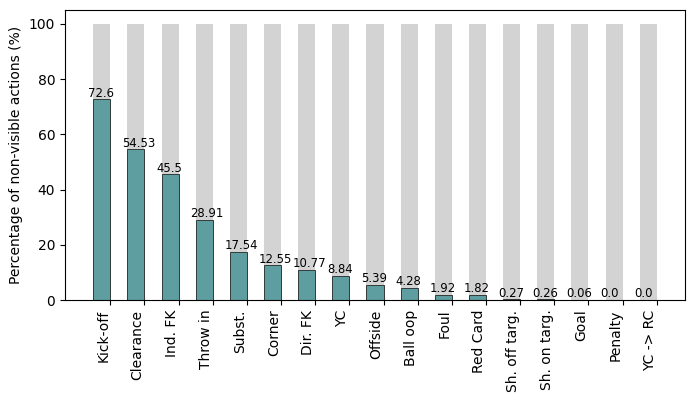

In this paper, we introduce ASTRA, a Transformer-based model designed for Action Spotting in soccer matches. ASTRA addresses several challenges inherent in the task and dataset: the need for precise action localization, a long-tail data distribution, the non-visibility of certain actions, and inherent label noise. To do so, ASTRA incorporates (a) a Transformer encoder-decoder architecture that produces precise predictions at the desired output temporal resolution, (b) a balanced mixup strategy to handle the long-tail distribution of the data, (c) an uncertainty-aware displacement head to capture label variability, and (d) the input audio signal to enhance the detection of non-visible actions. Results demonstrate the effectiveness of ASTRA, which achieves a tight Average-mAP of 66.82 on the test set. Moreover, in the SoccerNet 2023 Action Spotting challenge, we secured 3rd place with an Average-mAP of 70.21 on the challenge set.

Motivation

An in-depth examination of the Action Spotting task in soccer matches revealed four main challenges: (1) the need for precise predictions, given the tight tolerances used to evaluate the task; (2) a long-tail data distribution, in which some actions occur infrequently; (3) noisy labels, resulting from the annotators' subjective judgment of temporal locations; and (4) the non-visibility of certain actions due to replays or camera angles. ASTRA includes a dedicated module to address each of these challenges.

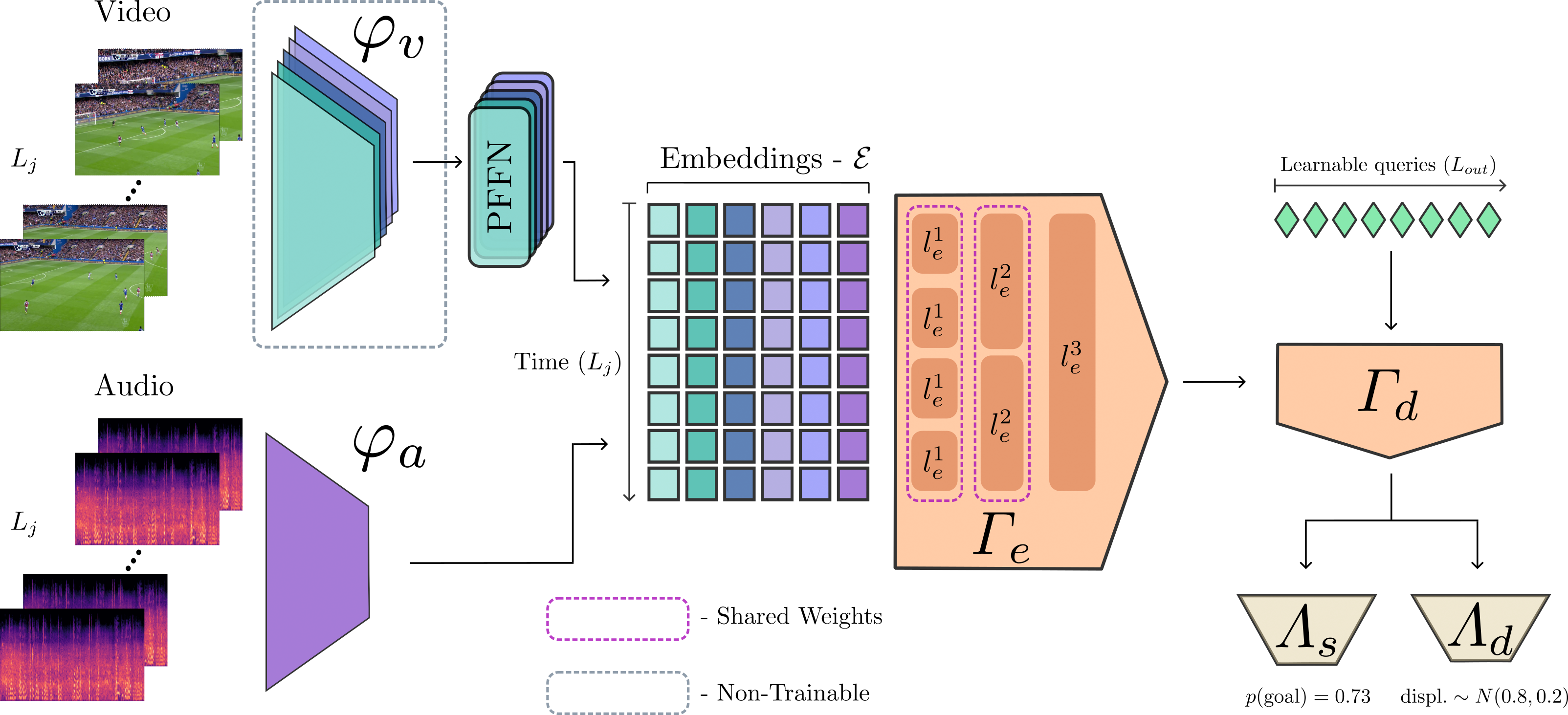

Architecture

Our solution, ASTRA, leverages embeddings \( \mathcal{E} \) from multiple modalities. Specifically, ASTRA is built upon \( | \mathcal{E} | - 1 \) pre-computed visual embeddings, complemented by an audio embedding derived from the log-mel spectrogram of the audio using a VGG-inspired backbone, which is trained jointly with ASTRA. The embeddings are fed to the model in clips spanning \( T \) seconds. The features from each backbone are processed in parallel streams, in which Point-wise Feed-Forward Networks (PFFN) project them to a common dimension \( d \). The projected embeddings are then combined in a Transformer encoder-decoder module with learnable queries in the decoder. Inspired by the DETR architecture, this module lets ASTRA handle different input and output temporal dimensions, \( L_{\text{in}} \) and \( L_{\text{out}} \) respectively, and facilitates a straightforward fusion of multiple embeddings. To improve ASTRA's ability to capture fine-grained details, we introduce a temporally hierarchical architecture for the Transformer encoder, which lets the initial layers attend to more local information and reduces the computational cost. Finally, ASTRA employs two prediction heads that generate classification and displacement predictions for the anchors introduced by Soares et al., where each anchor corresponds to a specific temporal position and action class, as described in their work. Following their suggestion, we also use a classification radius \( r_{c} \) and a displacement radius \( r_{d} \), which define the temporal range around a ground-truth action within which it can be detected.
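
The anchor targets described above can be illustrated with a minimal Python sketch. It assumes, as a simplification, that the \( L_{\text{out}} \) anchors of a clip sit at the centres of equal-width temporal bins, that an anchor is a positive for class c when an action of that class lies within \( r_{c} \) of it, and that it receives a displacement target (the signed offset to the nearest such action) only within \( r_{d} \). The function name `anchor_targets` and its arguments are hypothetical and are not taken from the ASTRA or Soares et al. code.

```python
from typing import List, Tuple


def anchor_targets(
    actions: List[Tuple[float, int]],  # ground-truth (time in seconds, class index)
    num_classes: int,
    clip_start: float,  # clip start time in seconds
    clip_len: float,    # T, clip duration in seconds
    l_out: int,         # L_out, number of output temporal positions
    r_c: float,         # classification radius in seconds
    r_d: float,         # displacement radius in seconds
):
    """Return per-anchor classification and displacement targets (hypothetical helper).

    cls[t][c] is 1 if an action of class c lies within r_c of anchor t, else 0.
    disp[t][c] is the signed offset (seconds) from anchor t to the nearest action
    of class c within r_d, or None if there is no such action.
    """
    step = clip_len / l_out
    cls = [[0] * num_classes for _ in range(l_out)]
    disp = [[None] * num_classes for _ in range(l_out)]
    for t in range(l_out):
        anchor_time = clip_start + (t + 0.5) * step  # assumed: centre of bin t
        for time, c in actions:
            offset = time - anchor_time
            if abs(offset) <= r_c:
                cls[t][c] = 1
            # keep the closest action of this class inside the displacement radius
            if abs(offset) <= r_d and (disp[t][c] is None or abs(offset) < abs(disp[t][c])):
                disp[t][c] = offset
    return cls, disp
```

In this sketch, anchors whose displacement target is `None` would simply be excluded from the displacement loss.
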
Furthermore, to account for label uncertainty, ASTRA's displacement head models displacements as Gaussian distributions instead of deterministic temporal positions. This allows ASTRA to capture the uncertainty in each action's temporal location rather than committing to a single point estimate. Additionally, ASTRA incorporates a balanced mixup technique to improve generalization and accommodate the long-tail distribution of the data.
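
One common way to train a Gaussian displacement head is to have it predict a mean and a log-variance for each anchor and to minimise the Gaussian negative log-likelihood of the ground-truth offset. The sketch below assumes this parameterisation; the function names are hypothetical, and ASTRA's exact formulation and loss weighting may differ.

```python
import math


def gaussian_nll(target: float, mu: float, log_var: float) -> float:
    """Negative log-likelihood of `target` under N(mu, exp(log_var)).

    Predicting the log-variance (rather than the variance) keeps the variance
    positive without an explicit constraint.
    """
    var = math.exp(log_var)
    return 0.5 * (math.log(2.0 * math.pi) + log_var + (target - mu) ** 2 / var)


def displacement_loss(targets, mus, log_vars) -> float:
    """Mean Gaussian NLL over anchors that have a displacement target (not None)."""
    terms = [
        gaussian_nll(y, m, lv)
        for y, m, lv in zip(targets, mus, log_vars)
        if y is not None
    ]
    return sum(terms) / len(terms) if terms else 0.0
```

Because the squared error is divided by the predicted variance, the model can lower the loss on anchors with ambiguous annotations by predicting a larger variance, while the log-variance term penalises claiming high uncertainty everywhere.
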

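Balanced mixup can be sketched in the same spirit. This version assumes that each training sample is mixed with a partner drawn by first picking a class uniformly at random and then a sample of that class, so that rare actions are mixed in as often as frequent ones, and that features and labels are interpolated with a weight λ ~ Beta(α, α). The per-class `pool`, the flat feature and label vectors, and the default α are illustrative assumptions rather than ASTRA's actual settings.

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple

# (feature vector, label vector); flat lists keep the sketch dependency-free
Sample = Tuple[List[float], List[float]]


def balanced_mixup(
    batch: Sequence[Sample],
    pool: Dict[int, List[Sample]],  # hypothetical per-class store of training samples
    alpha: float = 0.2,             # Beta(alpha, alpha) concentration (assumed value)
    rng: Optional[random.Random] = None,
) -> List[Sample]:
    """Mix each sample with a partner drawn class-uniformly from `pool`."""
    if rng is None:
        rng = random.Random()
    classes = [c for c, items in pool.items() if items]
    mixed: List[Sample] = []
    for x, y in batch:
        c = rng.choice(classes)       # every class equally likely, regardless of frequency
        x2, y2 = rng.choice(pool[c])  # then a random sample of that class
        lam = rng.betavariate(alpha, alpha)
        x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x, x2)]
        y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y, y2)]
        mixed.append((x_mix, y_mix))
    return mixed
```

For instance, in a two-class pool where one class holds only 1 of 100 samples, that class still supplies roughly half of the mixing partners.
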
BibTeX

@inproceedings{xarles2023astra,
  title={ASTRA: An Action Spotting TRAnsformer for Soccer Videos},
  author={Xarles, Artur and Escalera, Sergio and Moeslund, Thomas B and Clap{\'e}s, Albert},
  booktitle={Proceedings of the 6th International Workshop on Multimedia Content Analysis in Sports},
  pages={93--102},
  year={2023}
}
